Crop factor X5 VS Full Frame

- Thread starter airdrone

- Start date

I think it's more like 2.4, so your stock 15mm becomes more like 34-35mm, which for me is far too tight for general video (good for pictures, though). I much prefer something around 24mm for aerial video. I have the Olympus 12mm, which is much nicer for video: although it's only 3mm shorter than the stock 15mm, that works out to about 7-8mm wider in full-frame-equivalent terms when filming, and it makes a big difference. The Olympus 12mm ends up at about 28mm, I think.
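To put numbers on the figures above, here is a minimal sketch of the usual full-frame-equivalent arithmetic (focal length times crop factor). The function name `equivalent_focal_length` is only illustrative, and the crop factors tried are the ones mentioned in this thread, not measured values.

```python
def equivalent_focal_length(focal_mm: float, crop_factor: float) -> float:
    """Full-frame-equivalent focal length for a lens on a cropped sensor area."""
    return focal_mm * crop_factor


# Crop factors quoted in this thread: 2.0x (photo/1080p), 2.3x and 2.4x (4K estimates).
for crop in (2.0, 2.3, 2.4):
    for lens_mm in (12, 15):
        eq = equivalent_focal_length(lens_mm, crop)
        print(f"{lens_mm}mm at {crop}x crop is roughly {eq:.1f}mm equivalent")
```

With a crop between 2.3x and 2.4x, the 15mm lands around 35mm and the 12mm around 28mm, which lines up with the numbers in the post.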

I just want the camera firmware to be stable and the weather to change. We're very much beta testers at the moment, I'm afraid. Still, it's fun to be a part of in some ways!

- Joined

- Aug 2, 2014

- Messages

- 62

- Reaction score

- 13

An Olympus 25mm installed on the X5 is closer to what the eye sees. Isn't that right?

- Joined

- Aug 2, 2014

- Messages

- 62

- Reaction score

- 13

Depends what mode you shoot in.

For photos, the MFT format is considered a 2x crop factor, but some video modes read out only a smaller area of the sensor, so the crop factor is higher in those modes.

Or what lens do I need on the X5 to get the same angle of view as on my Sony a7R II, where I film in Super 35 mode with a 35mm lens? I have understood that filming on the Sony a7R II in that mode with a 35mm lens is equivalent to about 50mm on full frame, which would mean that on my X5 I need a 25mm lens. Is that correct?
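The question above can be checked with a short sketch that matches angle of view between two cameras by equating their full-frame-equivalent focal lengths. The 1.5x figure for Super 35 mode is an assumption (a commonly used approximation), and `matching_focal_length` is just an illustrative name.

```python
def matching_focal_length(src_focal_mm: float, src_crop: float, dst_crop: float) -> float:
    """Focal length on the destination camera giving the same equivalent focal length."""
    return src_focal_mm * src_crop / dst_crop


SUPER35_CROP = 1.5  # assumed approximate crop factor for Super 35 mode

# X5 crop factors quoted in this thread for 1080p (2.0x) and 4K (2.3x).
for x5_crop in (2.0, 2.3):
    lens = matching_focal_length(35, SUPER35_CROP, x5_crop)
    print(f"X5 at {x5_crop}x crop: about {lens:.1f}mm matches 35mm in Super 35 mode")
```

Under these assumptions, 25mm is close for the 2x modes, while the 4K crop would call for something a little shorter.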

- Joined

- Feb 3, 2015

- Messages

- 111

- Reaction score

- 57

As said, depends on the resolution you shoot in.

DJI Zenmuse X5 and X5R: 4K Micro Four Thirds cameras for Inspire 1 drone with D-Log and RAW recording

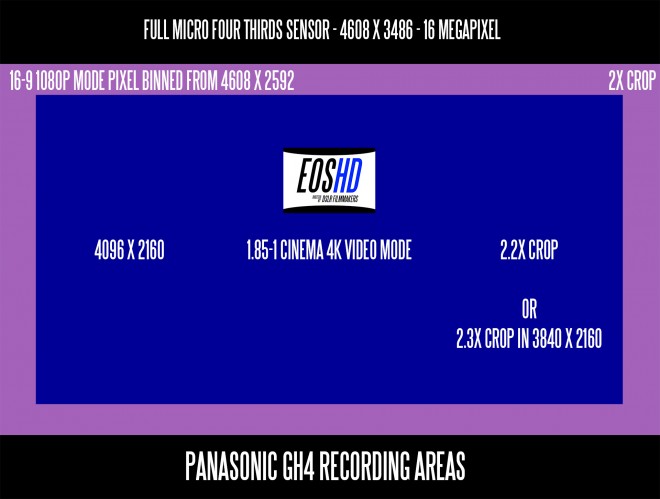

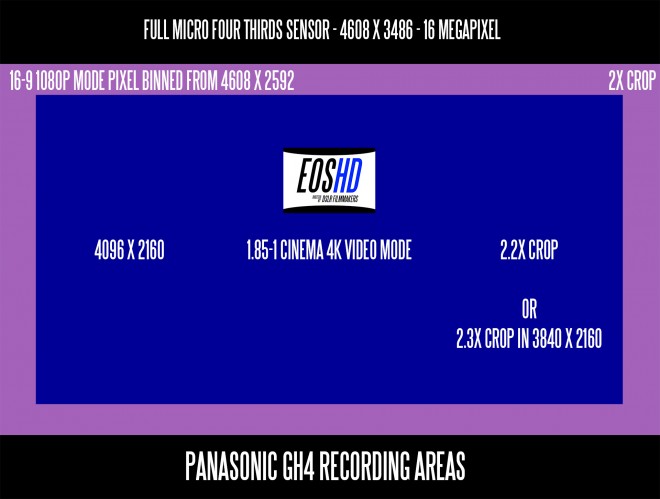

The sensor features a 2x crop when shooting 1080p and a 2.3x crop in 4K – the same crop factors as found on a Panasonic GH4.

- Joined

- Jul 6, 2014

- Messages

- 314

- Reaction score

- 147

This image illustrates the crop modes of the Panasonic GH4, which uses a very similar 16MP MFT sensor:

The sensor areas:

- Black (4608 x 3456) is the full 16MP sensor, used only for photos. This is a 2.0x crop.

- Magenta (4608 x 2592) is the full width of the sensor, but the top and bottom bands are not used, in order to get the 16:9 HD image ratio. This is used for 1920 x 1080 (HD) video. This is a 2.0x crop.

- Blue (4096 x 2160, or 3840 x 2160) is the part of the sensor used for 4K video. The crop is about 2.2x for the 4096-wide mode and about 2.3x for the 3840-wide mode.
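As a rough cross-check of the crop factors in this list, the sketch below scales the 2.0x full-width crop by how much narrower each video readout is. This width-ratio estimate is a simplifying assumption rather than a manufacturer formula, and `estimated_crop` is an illustrative name.

```python
FULL_WIDTH_PX = 4608  # full sensor width in pixels, from the list above
BASE_CROP = 2.0       # crop factor when the full width is used


def estimated_crop(readout_width_px: int,
                   full_width_px: int = FULL_WIDTH_PX,
                   base_crop: float = BASE_CROP) -> float:
    """Scale the base crop factor by the ratio of full width to readout width."""
    return base_crop * full_width_px / readout_width_px


for width in (4608, 4096, 3840):
    print(f"{width}px readout: about {estimated_crop(width):.2f}x crop")
```

This simple estimate gives about 2.25x and 2.4x for the two 4K widths, in the same range as the 2.2x and 2.3x quoted here and the 2.4 guessed earlier in the thread; the published figures are rounded and may be measured slightly differently.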

The follow-up question: "But for 1080 video, they sample more pixels and combine them; why wouldn't they do the same for 4K?" The answer: for 1080 video, each pixel in the image is produced by combining a block of about 2.4 x 2.4 sensor pixels (4608 / 1920 = 2.4). In other words, each pixel in the final image is derived from about 6 photosites on the sensor. But in 4K mode, there just aren't enough pixels to do this; it could only use 1.2 x 1.2 pixels. There isn't enough data to benefit from combining, so they don't, and the resulting image is superior.
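The oversampling figures in that answer follow directly from the pixel widths. A minimal sketch, assuming the full 4608-pixel width would be scaled down to each output width (`oversampling` is an illustrative helper name):

```python
def oversampling(sensor_width_px: int, output_width_px: int) -> tuple[float, float]:
    """Return (linear ratio, photosites per output pixel) for a full-width downscale."""
    linear = sensor_width_px / output_width_px
    return linear, linear ** 2


for label, out_width in (("1080p", 1920), ("4K UHD", 3840)):
    linear, per_pixel = oversampling(4608, out_width)
    print(f"{label}: {linear:.2f} x {linear:.2f}, about {per_pixel:.2f} photosites per pixel")
```

For 1080p this comes to 2.4 x 2.4, or just under 6 photosites per output pixel; for 4K it is only 1.2 x 1.2, well under 2 photosites per pixel.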

The natural next question: "Why don't they use a sensor that has twice the number of pixels in each direction that 4K video requires, and oversample those pixels to combine them into a 4K video image?" The answer: that's roughly a 36 megapixel sensor, which would be much, much more expensive, and would require much, much faster hardware to decode. So wait about 5 years, and that's what we'll all be flying.
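The 36 megapixel figure can be sanity-checked with a quick calculation, assuming "twice" means twice the pixels along each axis of the 4K frame (an interpretation, since the post does not spell it out). The helper name `oversampled_megapixels` is illustrative.

```python
def oversampled_megapixels(width_px: int, height_px: int, factor: int = 2) -> float:
    """Megapixels needed to cover a video frame with `factor`x pixels along each axis."""
    return (width_px * factor) * (height_px * factor) / 1_000_000


for label, (w, h) in (("4096 x 2160", (4096, 2160)), ("3840 x 2160", (3840, 2160))):
    print(f"2x oversampled {label}: about {oversampled_megapixels(w, h):.1f} MP")
```

That gives about 35.4 MP for the 4096-wide format and about 33.2 MP for the 3840-wide one, counting only the 16:9-ish video area; a 4:3 sensor of the same width would need more.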
